
So another brutal round of finals has come and gone at UConn. Rather than relax and enjoy my brief week of freedom before packing up and leaving on my next adventure, I scrambled to get the first third of my thesis working. Although HORNET hasn't melted down (yet), the grad students and professors who also have access flooded it with jobs in the first few days after finals, now that the semester is over. At first I thought the cluster was down for the summer, which resulted in a concerned email from one of my thesis advisors to whoever is in charge of the cluster. Not good.

Summary of how this third of the thesis is going so far:

Fortunately, I figured out that the cluster has more than just the standard and priority queues; the standard queue was the one I needed for my jobs. Using some nodes in the Sandy Bridge queue ended up being the fix, although a sizable number of nodes on the standard queue have since freed up, as the other users seem to have finished most of the work they needed for whatever research they're doing.

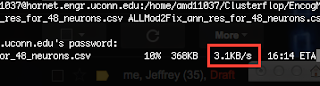

Unfortunately, even with all the free time, my attempts to improve the pattern recognition part of this thesis through better signal filtering have been inconclusive. After numerous trials, lots of tweaking, and an insane number of hours training countless ANNs and SVMs, the correlation between each algorithm's output and the expected value remained minimal. Even just moving files on and off the cluster took literally hours. I have arguably the worst internet around, since we still use DSL, and these files are so large that my thesis advisor considers this actual "big data".

GOTTA GO FAST

That's only a result file; some of my input files are well over 100 MB.

At one point I was able to get the correlation up to about 0.41 on the ANN (the closer to 1 the better, whereas 0 means no correlation). However, I was unable to repeat this because ANNs, as implemented, are randomized algorithms, so no two training runs come out exactly the same. About 0.35 seems to be the best correlation I can reproduce, but for some reason it has dropped back down to around 0.18 for both the ANN and the SVM, despite the several filtering techniques I'm implementing. The more I try to fix it, the worse the correlation gets.
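For anyone wondering what those numbers mean: the post doesn't say exactly which correlation measure was used, so the sketch below assumes Pearson's r, which runs from -1 to 1 with 0 meaning no linear correlation. It also shows, with a hypothetical `init_weights` helper standing in for an ANN's random weight initialization, how fixing a random seed is one common way to make a randomized run repeatable. This is a minimal illustration, not the actual thesis code.

```python
import math
import random


def pearson_r(predicted, expected):
    """Pearson correlation between model outputs and expected values (assumed measure)."""
    if len(predicted) != len(expected) or len(predicted) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_e = sum(expected) / n
    # Covariance and variances, all left unnormalized since the 1/n factors cancel
    cov = sum((p - mean_p) * (e - mean_e) for p, e in zip(predicted, expected))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_e = sum((e - mean_e) ** 2 for e in expected)
    if var_p == 0 or var_e == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_p * var_e)


def init_weights(n, seed=None):
    """Hypothetical stand-in for an ANN's random initial weights."""
    rng = random.Random(seed)  # seed=None draws fresh randomness each call
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]


if __name__ == "__main__":
    print(pearson_r([1, 2, 3, 4], [2, 4, 6, 8]))  # 1.0 (perfect positive)
    print(pearson_r([1, 2, 3, 4], [8, 6, 4, 2]))  # -1.0 (perfect negative)
    # Same seed gives identical starting weights, so a run can be repeated
    print(init_weights(3, seed=42) == init_weights(3, seed=42))  # True
```

Seeding only pins down the randomness that goes through the seeded generator; if a training library draws randomness elsewhere (shuffling, dropout, parallel reductions), those sources would need to be controlled too.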

Since I'm leaving to start my new job at Google tomorrow, this part of the thesis will be put on hold... for now.